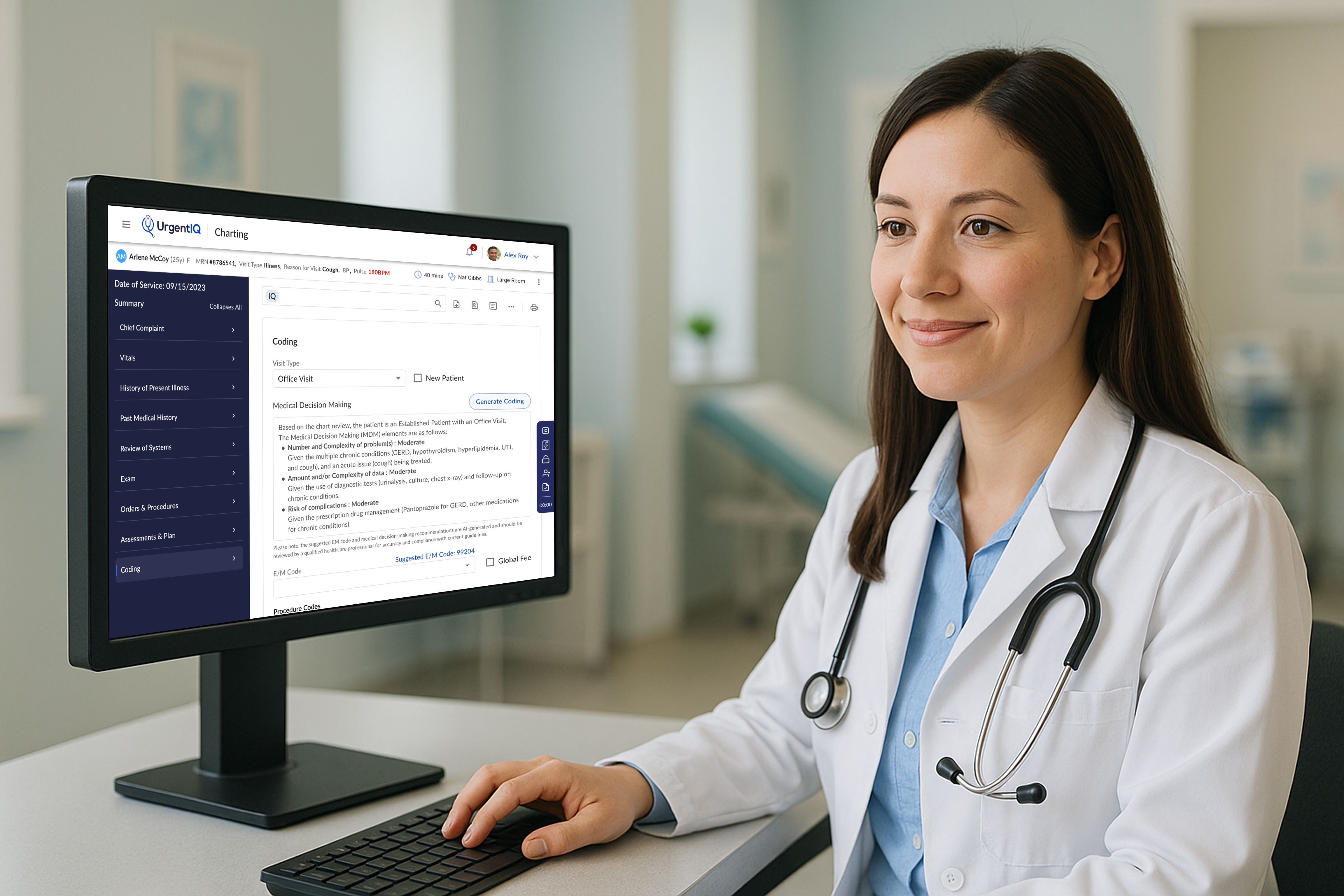

About UrgentIQ

UrgentIQ is a New York-based health tech startup founded in 2022, dedicated to transforming urgent care through intelligent, AI-powered software.

In the post-pandemic healthcare landscape, urgent care clinics have become increasingly vital, helping to ease pressure on hospitals when primary care isn't available. UrgentIQ aims to meet this need by building a modern EMR (Electronic Medical Record) system tailored specifically for the fast-paced demands of urgent care. The platform streamlines clinical documentation and reduces time spent per patient—enabling clinics to see more patients and deliver higher-quality care.

Project Background

I joined UrgentIQ, then a lean team of just 12 employees, as the Product and Design Lead. Among many initiatives, I led the development of the EM Coding automation feature, which uses AI to reduce charting time and improve coding accuracy.

What is EM Coding and Why It Matters

Evaluation and Management (E/M) coding is a standardized system used in medical documentation and billing to classify the complexity of patient visits. It determines how healthcare providers are reimbursed for services like consultations, office visits, and patient evaluations. These codes are guided by criteria set by the American Medical Association (AMA) and the Centers for Medicare & Medicaid Services (CMS), with a strong emphasis on the provider's medical decision-making (MDM).

Accurate EM coding is essential not only for clinical documentation but also for ensuring proper reimbursement. For urgent care clinics—where providers see a high volume of varied cases daily—this accuracy directly impacts revenue. Undercoding can lead to lost income, while overcoding can result in audits and legal risks.

For UrgentIQ, automating and improving EM coding represented a critical opportunity: it would reduce administrative burden on providers, improve coding accuracy, and increase clinic revenue by helping ensure providers are appropriately reimbursed for the care they deliver.

My Role

As the Product and Design Lead, I was responsible for end-to-end ownership of the EM Coding feature—from initial discovery through to launch and iteration. This included:

- Collaborating closely with clinical advisors and engineers to understand the nuances of E/M guidelines and provider workflows

- Mapping out user journeys and identifying key decision points for automation

- Designing the interaction model, UI patterns, and review mechanisms for provider transparency and trust

- Facilitating design reviews, usability testing, and gathering feedback from real clinic users

- Defining success metrics and partnering with engineering to ship incrementally, balancing speed with compliance

Project Goal

Redesign the EM coding experience in UrgentIQ to reduce the time providers spend charting and to reduce undercoding of clinic visits.

Research

To design an EM coding assistant that providers would trust and actually use, we first needed to understand the complexities of the coding system, current documentation workflows, and real-world pain points faced by urgent care clinicians.

1. Clinical Landscape & Coding Guidelines

I began by diving into official EM coding documentation from the AMA and CMS to fully understand the components that determine code levels, especially Medical Decision-Making (MDM), which determines the final EM code. I created documentation to explain to engineers how the EM code is calculated based on problems addressed, data reviewed, and risk of complications. I also found that the rubric was straightforward and that the majority of E/M coding decisions could be predicted from structured data already captured during charting.

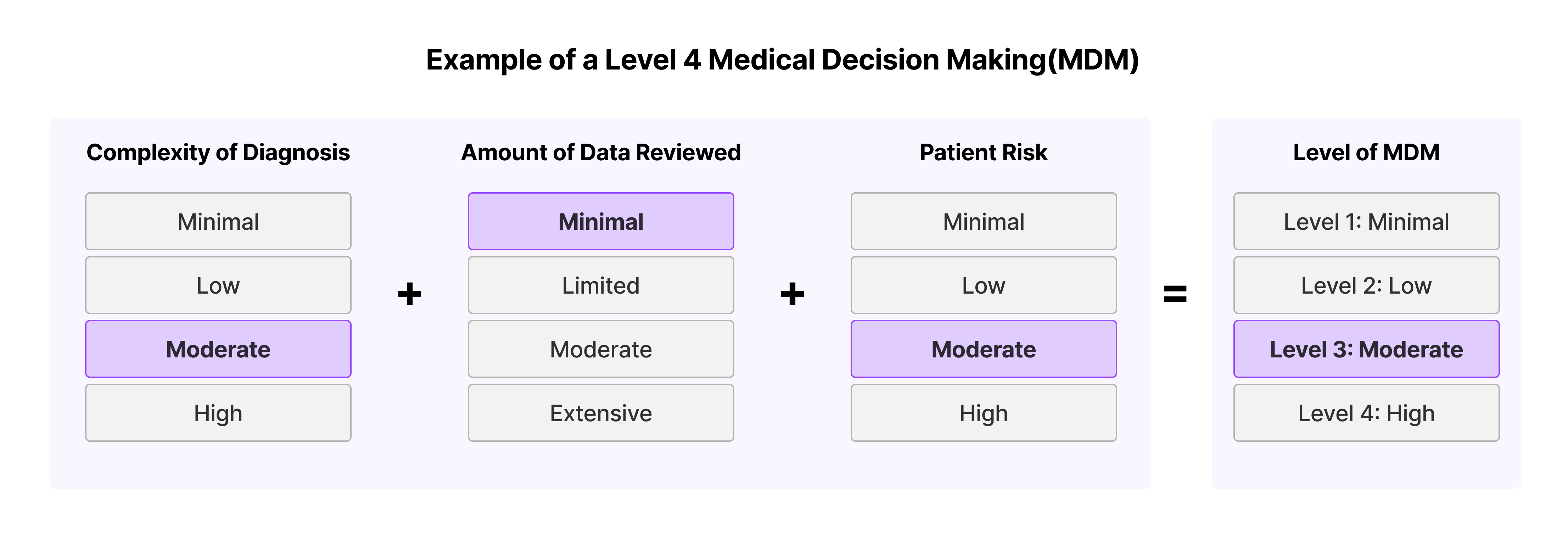

The MDM is split into 3 components:

- The complexity of diagnoses

- Data reviewed

- The risk associated with a patient's condition

Each component gets a rating: minimal, low, moderate, or high. The overall MDM level is the level met or exceeded by at least two of the three components. In practice, you throw out the highest rating and take the next one. For example, if Complexity of Diagnosis is level 3, Risk is level 3, and Data is level 2, the MDM is level 3.
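The rule above can be sketched in a few lines of Python. This is a minimal illustration, not UrgentIQ's implementation: the `Level` enum, its numeric values, and the `mdm_level` function are hypothetical names chosen to mirror the example in the text.

```python
from enum import IntEnum


class Level(IntEnum):
    # Numeric values are an assumption chosen to match the "level 2 / level 3" example.
    MINIMAL = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4


def mdm_level(problems: Level, data: Level, risk: Level) -> Level:
    """Return the level met or exceeded by at least two of the three components."""
    ranked = sorted([problems, data, risk], reverse=True)
    # Dropping the single highest rating leaves the second-highest,
    # which is the level that two components meet or exceed.
    return ranked[1]


# The example from the text: diagnosis complexity 3, data 2, risk 3.
result = mdm_level(problems=Level.MODERATE, data=Level.LOW, risk=Level.MODERATE)
print(result.name)
```

Taking the second-highest rating means a single unusually high component cannot raise the overall level on its own, which is why one high rating paired with two low ones still yields a low MDM.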

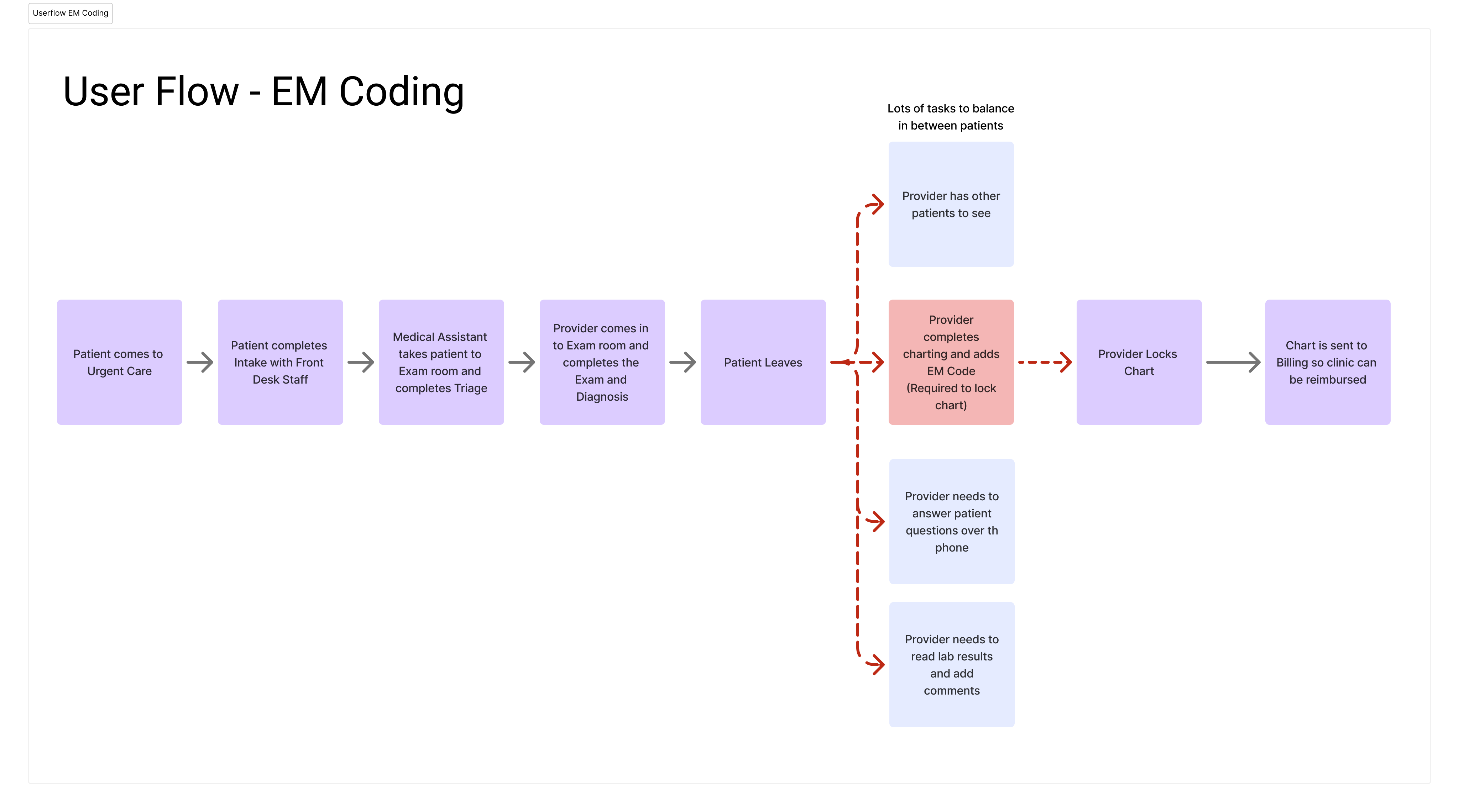

2. Workflow Analysis with Providers

I knew providers would be the primary users of the EM coding assistant, so I spoke with providers using our EMR and with clinical advisors, and I reviewed annotated charts to understand current pain points.

Key findings:

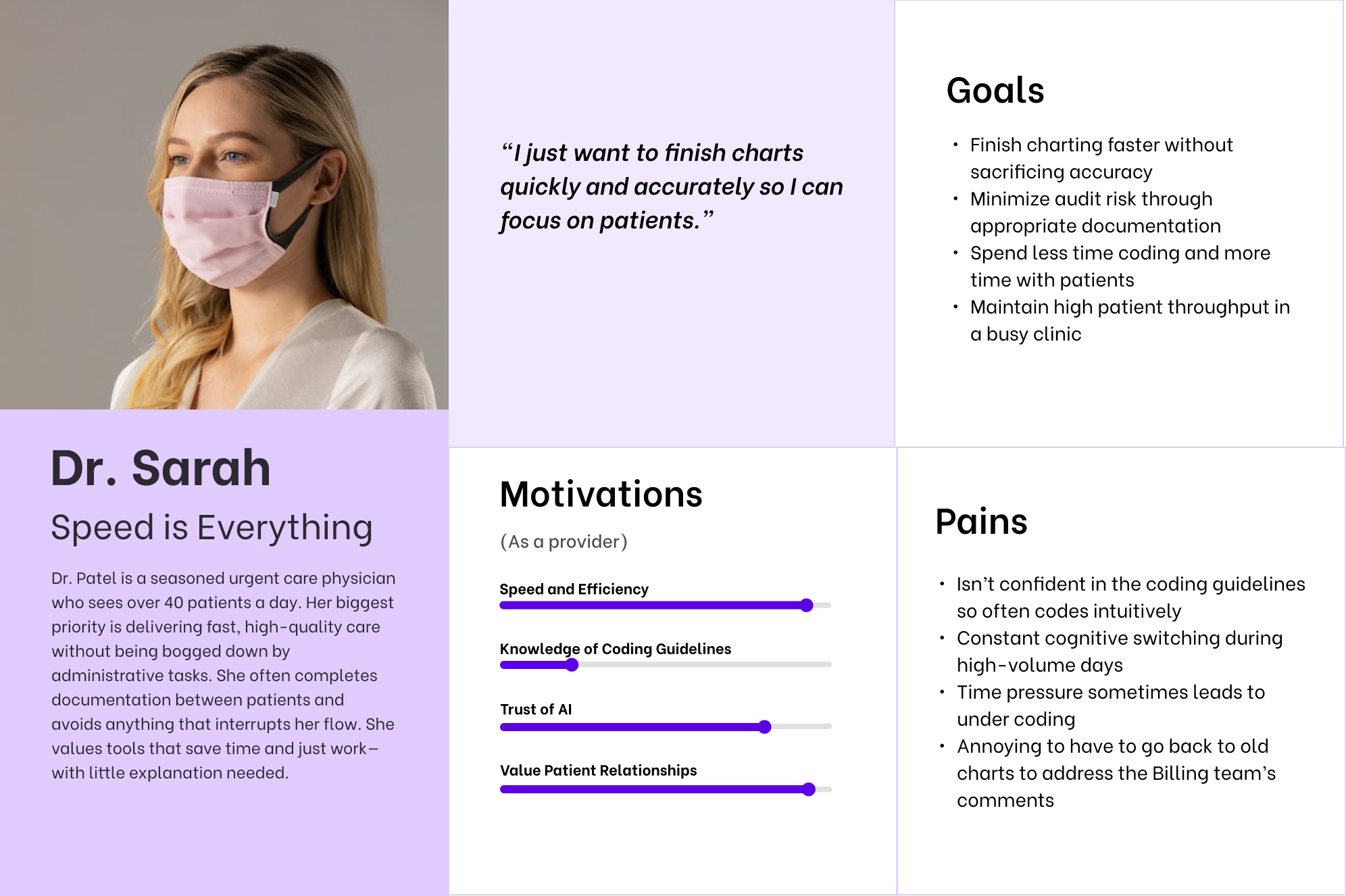

- Many providers defaulted to lower-level codes out of caution or time pressure

- Most providers don't understand the MDM rubric and often rely on intuition, which can lead to undercoding

- Critical coding elements were often buried across multiple parts of the chart (e.g., diagnosis severity, labs ordered, risk factors)

- There was a lack of real-time feedback or suggestions, making accurate coding a time-consuming, error-prone task

- Providers were open to automation, but only if they retained final control and had visibility into how the code was generated

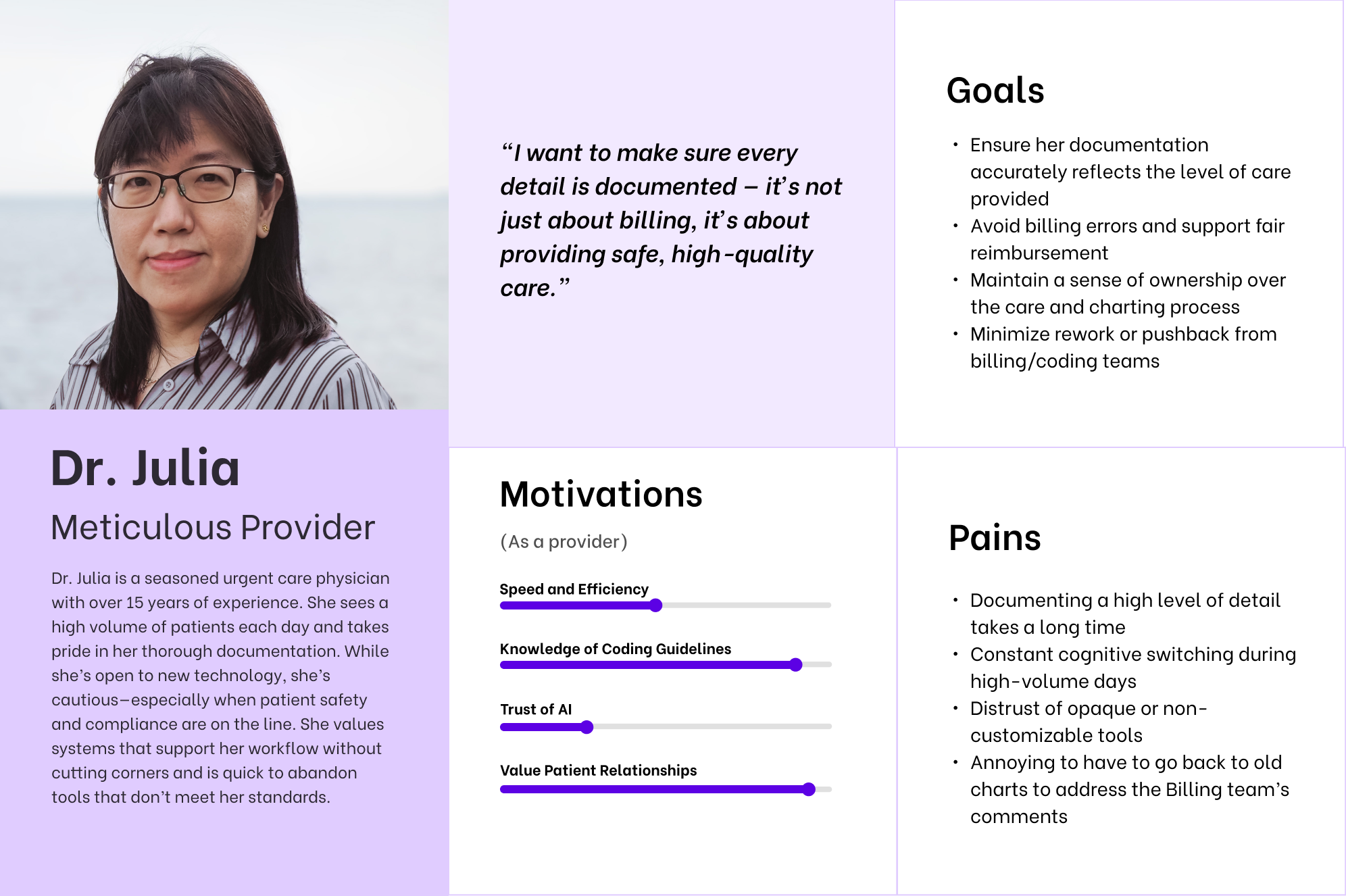

While uncovering these pain points, I noticed a difference in providers' needs. Some providers were more time-sensitive and wanted to get through the charting process as quickly as possible, while others were more detail-oriented and wanted to ensure they were coding accurately. Based on these findings, I created two personas for the primary user, the provider.

3. Interviews with Owners and Admins

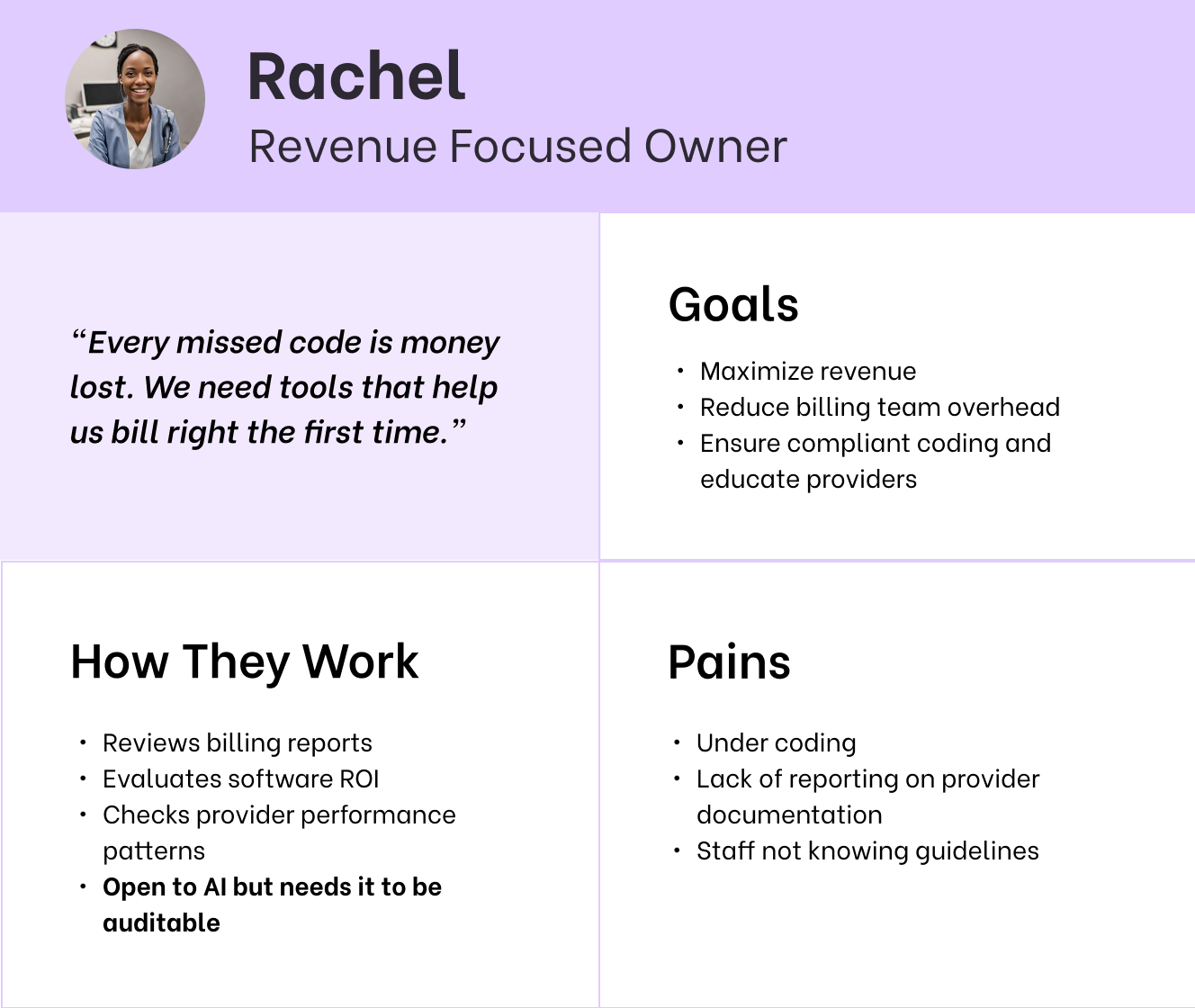

EM coding is integral to urgent care clinics, so it's important to note that two tertiary user groups are also impacted by this project: the clinic owners and the clinic's billing team. I interviewed clinic owners and admin staff to understand their pain points:

- Frequent code changes post-submission, often due to missing documentation or mismatches between clinical notes and coded level

- Strong demand for more visibility into what triggered a code level, so the provider could easily adjust or justify it

- Lack of knowledge from some providers on how coding works, making it difficult to explain why certain charts need to be upcoded or downcoded

- Concern about potential revenue loss from chronic undercoding by providers who don't know the MDM guidelines

Based on these findings I created personas for the clinic owner and billing team.

4. Audit of Current Experience

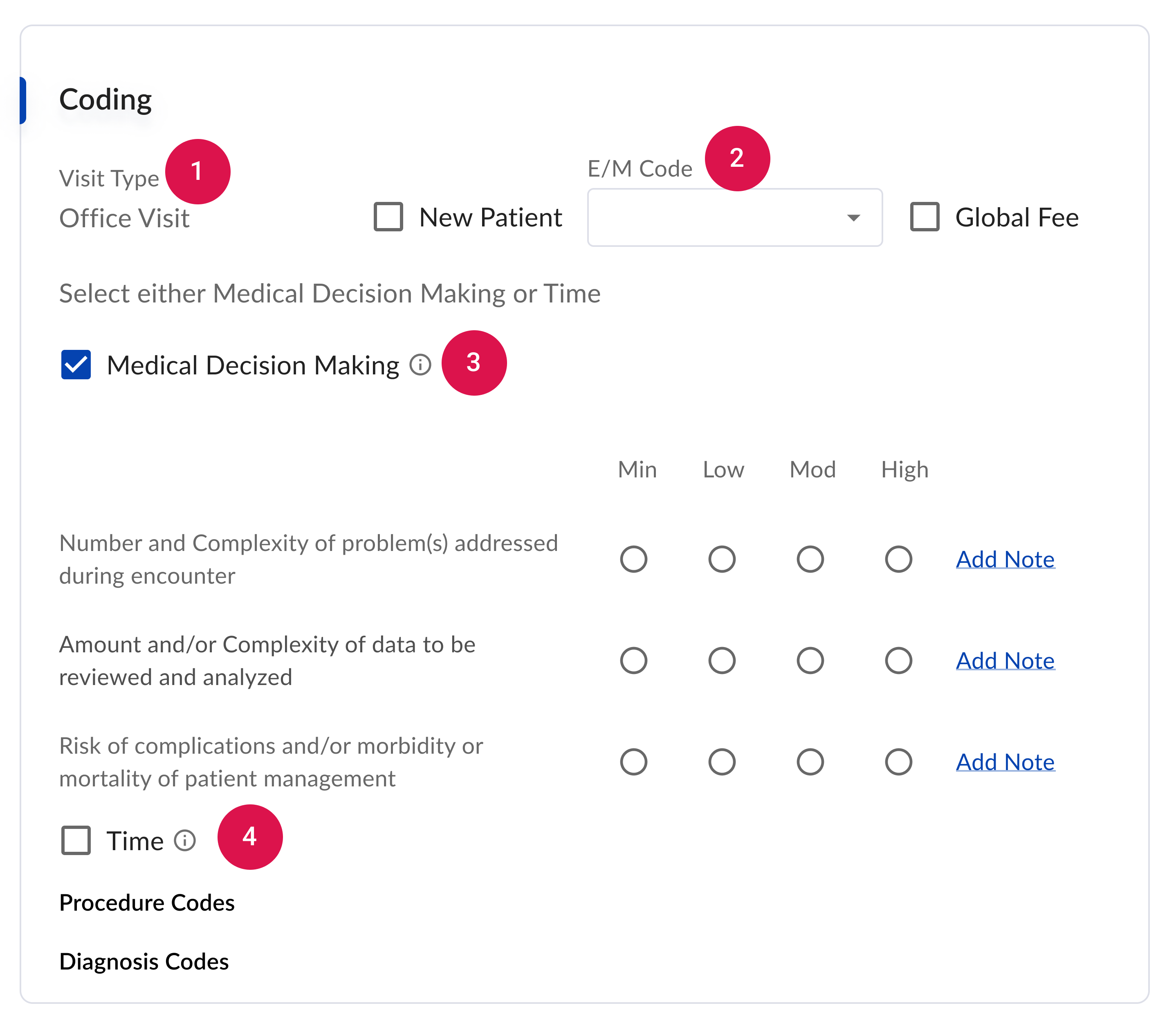

We had a rudimentary coding section in place that didn't reflect how codes are calculated and was confusing for users. Summary of issues with the existing coding section:

- Providers and billers need to be able to update visit type in case there is a mistake.

- Visual hierarchy is unclear – E/M code is dependent on what is filled out under Medical Decision Making, so it doesn't make sense that it's at the top.

- E/M code is calculated on Time OR MDM, but here it looks like you can do both due to the checkboxes.

- Spacing is inconsistent and looks messy.

- Currently, they have to calculate E/M code manually even though we could do it for them based on what they select for MDM.

5. Competitive & Analogous Research

I also reviewed how other EMRs approached EM coding—ranging from simple dropdowns to rigid rules-based engines. One key missing element across all these solutions was any explanation or education about selecting appropriate EM codes.

Design Process

Restatement of the Problems to be Solved

After reviewing all my research, I put together a more detailed list of project goals.

The new EM Coding Design should:

- Reduce time and mental effort needed for correctly coding a chart

- Allow users to edit visit types

- Clean up visual hierarchy to make it clear which sections need to be filled out

- Reduce back and forth with billing by making it easier to code correctly

Initial Design Concepts

First, I explored multiple potential solutions through sketches.

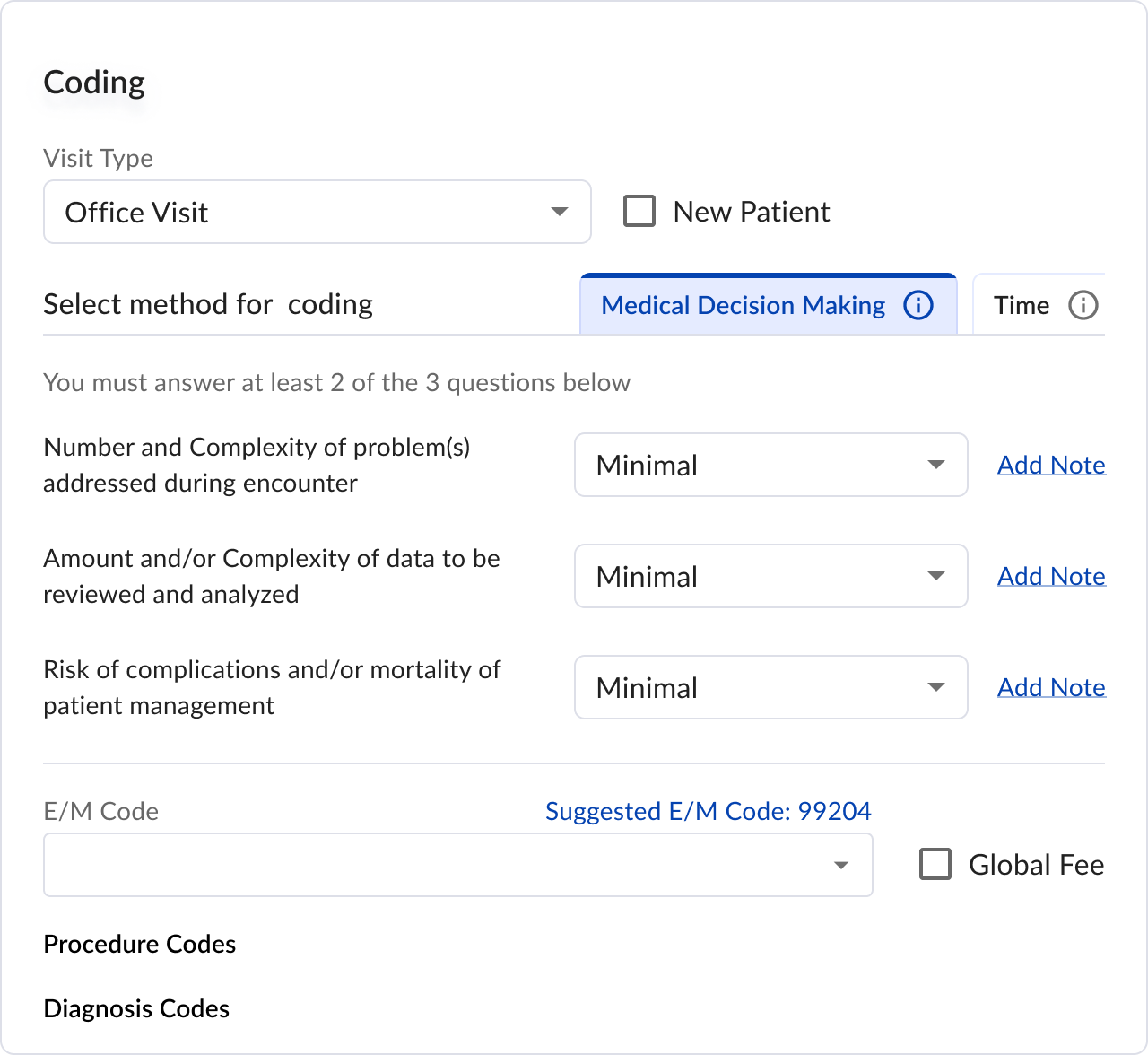

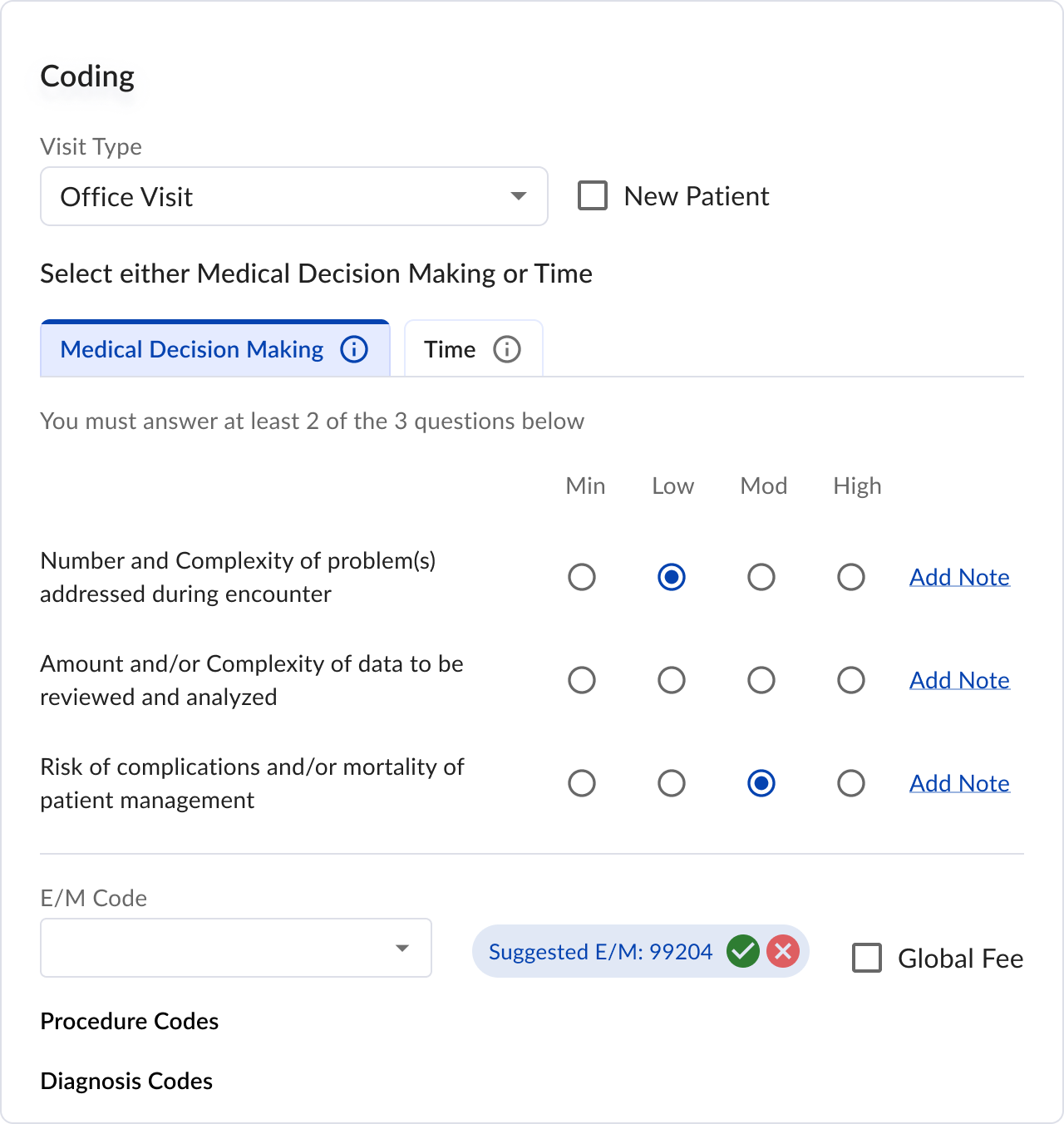

Option One: Reorganize into Tabs and Dropdown

This iteration focused on cleaning up the Coding section visually and reducing clutter. A simple rules engine would show users the recommended EM code based on their MDM level selections.

Pros:

- Conceals the less-used Time coding option

- Provides responsive dropdown interactions

- Calculates MDM automatically based on dropdown selections

Cons:

- Additional clicks required for dropdowns

- Users must still manually select levels for each MDM component
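To make the "simple rules engine" idea concrete, here is a minimal sketch of how a recommended code could be looked up from an MDM level. The function and table names are hypothetical, and the mapping assumes the standard office/outpatient visit code families (99202 to 99205 for new patients, 99212 to 99215 for established patients); it should be verified against the current CPT code set and is not taken from UrgentIQ's actual rules. The keys follow this article's rating names; the AMA labels the lowest overall MDM level "straightforward".

```python
# Assumed mapping from overall MDM level to office/outpatient E/M code.
NEW_PATIENT_CODES = {
    "minimal": "99202",
    "low": "99203",
    "moderate": "99204",
    "high": "99205",
}

ESTABLISHED_PATIENT_CODES = {
    "minimal": "99212",
    "low": "99213",
    "moderate": "99214",
    "high": "99215",
}


def recommend_em_code(mdm_level: str, new_patient: bool) -> str:
    """Look up the suggested E/M code for an MDM level and visit type."""
    table = NEW_PATIENT_CODES if new_patient else ESTABLISHED_PATIENT_CODES
    key = mdm_level.strip().lower()
    if key not in table:
        raise ValueError(f"unknown MDM level: {mdm_level!r}")
    return table[key]


# Example: an established patient with moderate MDM.
suggestion = recommend_em_code("Moderate", new_patient=False)
print(suggestion)
```

Because the lookup depends on visit type as well as MDM level, a sketch like this also shows why letting users correct the visit type matters: a wrong visit type changes the recommended code even when the MDM level is right.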

Option Two: Keep Radio Buttons

This option explored alternative UI elements while maintaining similar functionality.

Pros:

- Hides the less common Time coding option

- Calculates MDM based on radio button selections

- Enables tracking through accept/reject functionality for suggested EM codes

Cons:

- Interface shows too many options simultaneously

- Users still need to select MDM component levels manually

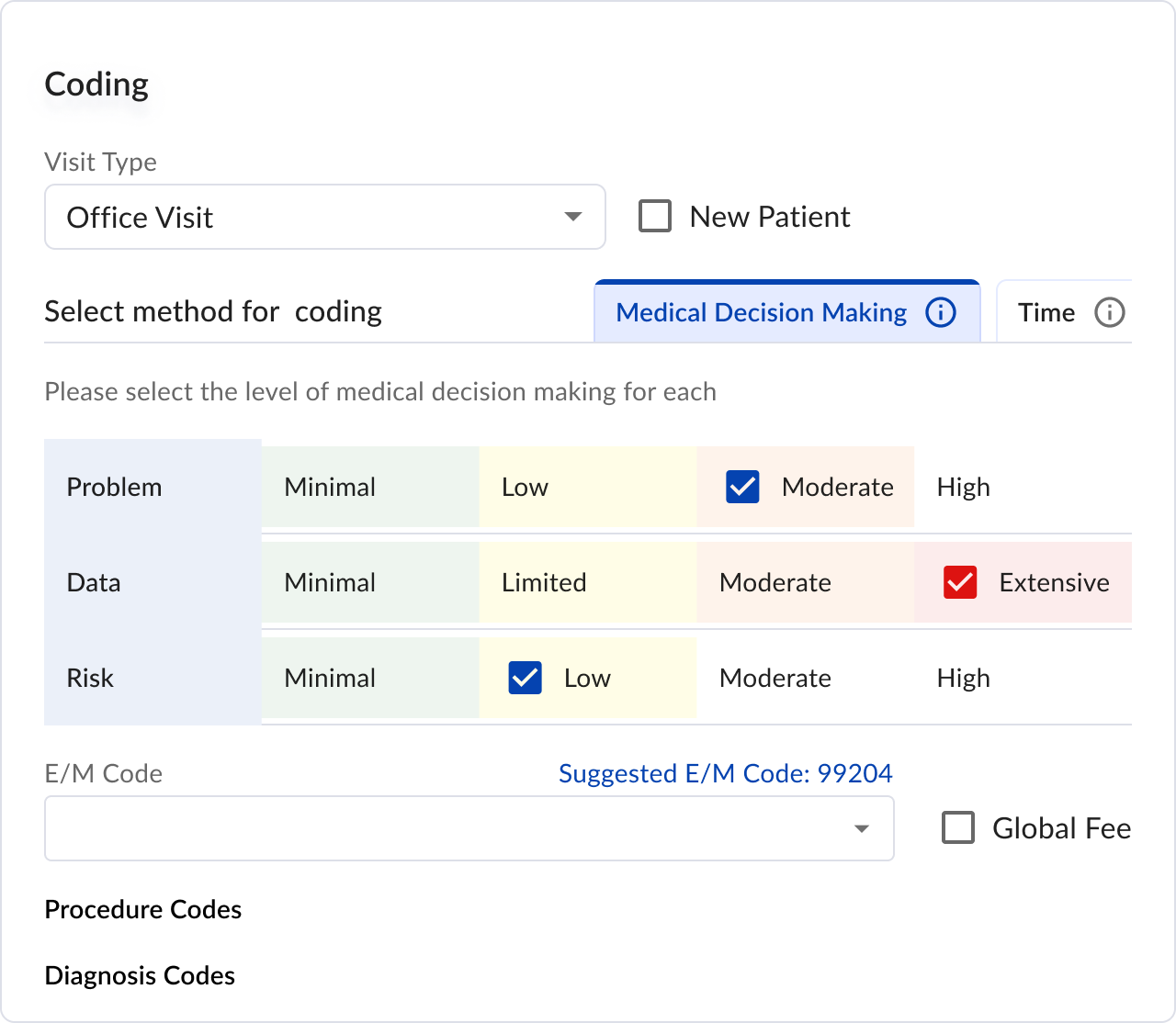

Option Three: Table View

Inspired by the MDM guidelines' clear examples, this design recreated their table format to enhance understanding.

Pros:

- Provides clear visual representation of appropriate levels

Cons:

- May be less intuitive for users unfamiliar with the guidelines

- Lacks responsiveness

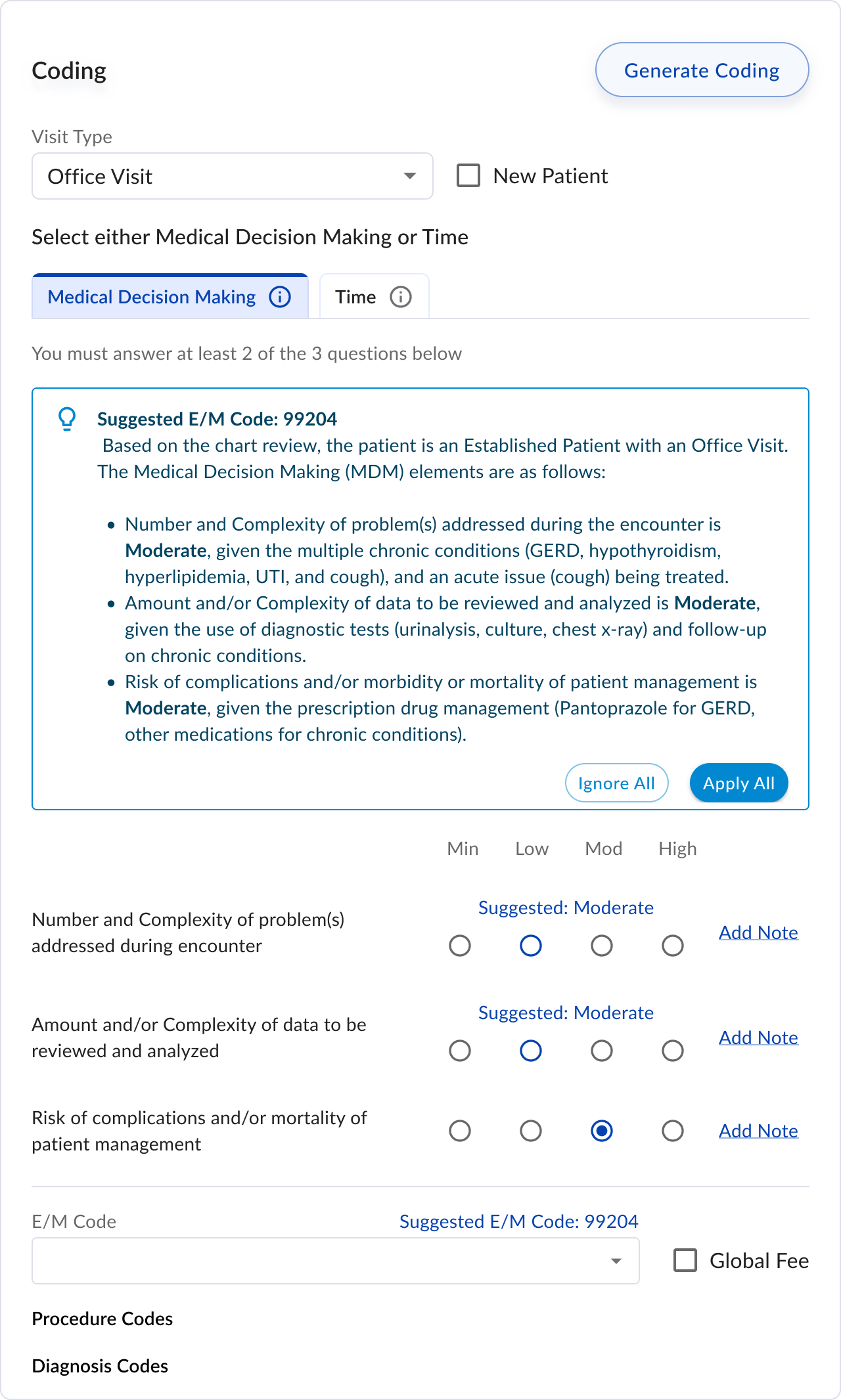

Option Four: AI Suggestion

My experience using ChatGPT to understand MDM guidelines sparked an idea: we could generate explanations to help users determine appropriate MDM levels, which would then inform the final code selection.

Pros:

- Simple radio button interface

- AI-powered education helps providers understand proper coding

Cons:

- Requires significant development effort for AI integration

- Must ensure HIPAA compliance in AI suggestions

I shared these potential solutions with our users and advisors. Here's their key feedback:

- Insurance companies focus primarily on the final EM code, making provider input of MDM levels redundant.

- Since providers often struggle to determine MDM levels, requiring them to select levels creates unnecessary complexity.

- Clinic owners emphasized that providers must personally input the final EM code to maintain legal compliance.

- Providers responded positively to the AI assistant, provided they could review its reasoning and make adjustments.

- There was significant enthusiasm for expanding AI capabilities to include features like missed diagnosis detection.

Based on this feedback, I focused on refining the AI-powered education and the ability to review and adjust the final EM code.

Final Design

Key Decision 1: Which AI Model to Use

We experimented with several models, including GPT-4, o1, Claude, and Perplexity, by running de-identified charts through each one along with a prompt containing all the EM coding rules, then checking whether the output matched the expected code.

In the end, we went with GPT-4 because it had the most consistent results and we were already using ChatGPT in other areas of the application.
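A comparison like this can be scored with a small harness. The sketch below is an assumption about how such a check might look, not the process we actually ran: `evaluate_model` and the sample data are hypothetical, and `predict` stands in for whatever function sends a de-identified chart and the coding prompt to a model and returns a numeric code level.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Tuple


def evaluate_model(
    predict: Callable[[str], int],
    labeled_charts: Iterable[Tuple[str, int]],
) -> Dict[str, float]:
    """Compare predicted code levels with expected levels and report rates."""
    counts: Counter = Counter()
    for chart_text, expected_level in labeled_charts:
        predicted_level = predict(chart_text)
        if predicted_level == expected_level:
            counts["match"] += 1
        elif predicted_level > expected_level:
            counts["overcoded"] += 1
        else:
            counts["undercoded"] += 1

    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labeled charts supplied")
    return {name: counts[name] / total for name in ("match", "overcoded", "undercoded")}


# Hypothetical de-identified charts paired with the level a coder expects.
sample_charts = [("chart a", 3), ("chart b", 4), ("chart c", 2), ("chart d", 3)]


def always_level_3(_chart: str) -> int:
    # Stub standing in for a real model call; it always answers level 3.
    return 3


report = evaluate_model(always_level_3, sample_charts)
print(report)
```

Tracking overcoding and undercoding separately, rather than a single accuracy number, matters here because the two errors carry different costs: undercoding loses revenue, while overcoding creates audit risk.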

Key Decision 2: Remove other options

Initially, we still wanted to give providers options for how to code their visit. We included an option to manually select the MDM and the ability to code using Time instead of medical decision making. However, after speaking with our customers and advisors, we received unanimous feedback that they would rather have a single option: AI-assisted coding.

Results

We gave clinics the ability to toggle AI-assisted coding on or off. 90% of providers opted into using the feature, showing strong initial trust and perceived value. However, 10% preferred to code manually, citing a lack of confidence in AI recommendations.

To understand downstream impact, we spoke with billing teams. Feedback was mixed:

- 70% of billers felt the AI's recommendations were generally accurate and reduced charting back-and-forth.

- 30% noted that the AI occasionally upcoded visits, requiring extra review to avoid compliance risk.

Despite this, feedback on the UI was overwhelmingly positive. Users appreciated the simplicity of the interface and how it surfaced the recommended Medical Decision Making (MDM) level. One key request: show how to improve a chart, not just the final code—especially for edge cases or borderline levels.

Next Steps

- Experiment with newer models and refined prompts to improve MDM accuracy and better capture nuance across encounter types.

- Build a feedback loop into the UI so providers and billers can flag incorrect or unclear codes, enabling us to track performance and retrain more effectively.

- Surface related procedures or diagnosis codes that may have been missed, helping ensure more complete and billable charts.